|

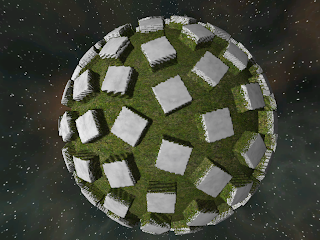

| Simple skyscraper buildings produced procedurally |

|

| Voxel castle produced by the new procedural building system |

The system is inspired primarily by the work of Müller, Wonka, Haegler, Ulmer and Van Gool in their paper Procedural Modeling of Buildings where they present a grammar based system for the specification of buildings (See Miguel Cepero's Procedural World blog for another interpretation of this work), this essentially enables a set of rules to be written which when interpreted by a parser results in geometry being created representing the building for rendering.

Language Choice

I wanted the system to be easy to play with so it was essential that the runtime be able to reload and parse these rules dynamically without having to recompile or even re-run the program so coding them statically into the C++ source was out, but rather than write my own parser I decided to make use of the Lua language and integrated that into my project instead. This gives me an out of the box solution for runtime loading and parsing and provides a far more powerful language feature set than I could hope to achieve in any home grown system.

Lua also has the advantage of being a model of simplicity to integrate with C++, syntactically similar enough to be quick to learn and well supported with documentation and tutorials on the internet. There are even various editing environments with support such as Notepad++ and Sublime providing out of the box syntax highlighting (there is also a Visual Studio extension to provide Lua syntax highlighting although at this time it appears to not support the 2012 edition I am using).

The Fundamentals

In essence, I am representing each type of building or structure with a Lua file, when a building of that type needs to be generated the Lua file is loaded and an entry point function called "Axiom" executed. From here on the Lua environment has control making it's entire feature set available to richly describe the shape and construction of the building. The output of this process is a Signed Distance Field (SDF, i.e. a 3D array of signed distance values) that can then be plugged in to the sparse voxel octree builder to produce a data structure the GPU ray caster can process to render images such as the castle above.

I expose a number of C++ functions to the Lua environment (22 at this time) that the script can call to perform useful intermediate processing operations and to output the final primitives that the SDF is constructed from.

The fundamental concept the script operates on at any one time is a 3D bounding box called a Scope, when the Axiom function of the script is called there are globals called xPos, yPos, zPos and xSize, ySize, zSize pre-defined with the 3D position and size of the current scope respectively which can be used to either emit an output shape or create additional scopes for further processing. Each additional scope created is given a name which is the name of the Lua function to call to produce it's contents. When that function is called it will in turn have the position and size globals pre-set to represent it's own position and size thus allowing each function to act on it's own area of 3D space without having to worry about where it came from or what transforms it's parents have been through.

Learning by Example

If that sounds confusing, a simple example might help:

function Axiom()

Scope(0, 4, 0, xSize,ySize-4,zSize, "Castle");

Scope(0,0,0, xSize, 4, zSize, "Ground");

end

here the main Axiom function is taking the original scope it's been given (which encompasses the entire 3D bounds of the building to start with) and creating two further scopes for later processing. The first is called "Castle" and is at position (0, 4, 0) with the same size as the global scope in the X and Z axis but four metres shorter than the global scope in the Y axis.The second scope it creates is called "Ground" and is at the origin of the current global scope (the origin is in the minimum corner of the box in (x,y,z) space) with the global scope's size in X and Z but is four metres tall in Y (the height). Essentially this is dividing the total space of the building into two horizontal slices, a 4m tall one at the base which will become the ground and a second sitting on top of that into which the building proper will be generated. The global scope is automatically destroyed when the function exits as it's not needed any more.

As it stands this script won't actually output anything, we need to add more code so it knows what to do with those two new scopes:

function Castle()

Box(5, 0, 5, xSize-10, ySize, zSize-10);

end

function Ground()

Box();

end

note here how the name of the scope is simply the name of the Lua function to call - a great advantage of a dynamic language like Lua that would be impossible in C++. In this simple example I've made the Castle function output a box 10m smaller than but centred within the scope in the X and Z axes and the full height of the scope in Y while the Ground function simple creates a box that fills the scope.Note the handy shortcut for the ground's box - having a shape fill the current scope is a common requirement so overloadings of many functions such as Box are provided that assume the origin and scope size as defaults.

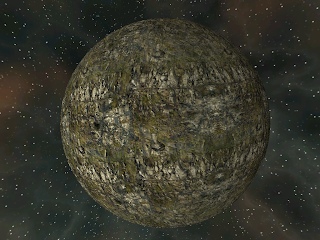

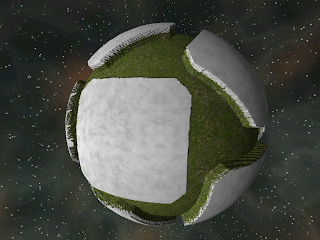

Running this simple script now produces this:

not perhaps the most convincing of castles but hopefully you can see how the script produces what you see here. It's important to remember though that it's not geometry that's being generated - there are no vertices and triangles being created by that Box function; instead for each point in the 256x256x256 SDF grid I'm using in the viewer it's calculating how far that point is from the surface of the boxes and storing that.

The brick and stone texturing is a result of the default appearance set when scripts are run, appearances are essentially a pair of textures one of which is used for flat surfaces and another for vertical ones - surface in between receive a blend of the two textures depending on their slope. It is possible to specify different appearances when creating shapes but I'll let these examples stick to the default for now.

A Slightly More Complex Example

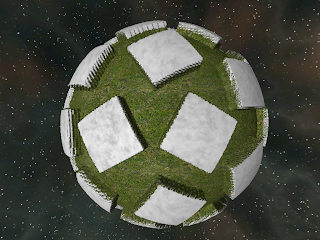

I don't want to turn this post into a reference manual for the construction system as that would be quite verbose and probably not terribly interesting so I'll jump straight forward to a more advanced example, making a tower with a crenelated top:

and here is the code that produced it:

function Axiom()

Scope(xSize/4, 4, zSize/4, xSize/2,ySize-4,zSize/2, "SquareTower");

Scope(0,0,0, xSize, 4, zSize, "Ground");

end

-- Produces a square tower with a crenelated top

function SquareTower()

Box(xSize, ySize-3, zSize); -- Body of square tower

SquareBoundary(merlonDepth, ySize-3, 3, xSize, zSize, "WallRampart");

end

-- Produces four long thin scopes forming a square boundary edge

function SquareBoundary(thickness, yPos, height, xSize, zSize, scopeName)

Size(thickness, height, zSize);

Scope(0, yPos, 0, scopeName);

RotateToY(180);

Scope(xSize, yPos, zSize, scopeName);

Size(thickness, height, xSize);

RotateToY(90);

Scope(xSize, yPos, 0, scopeName);

RotateToY(270);

Scope(0, yPos, zSize, scopeName);

RotateToY(0);

end

-- Produces a low wall topped by crenelations

function WallRampart()

Box(xSize, ySize*0.5, zSize);

Size(xSize, ySize*0.5, zSize+crenelWidth);

MoveTo(0, ySize*0.5, 0);

Appearance("StoneWall");

SubDivZ({ "rep", merlonWidth, "Merlon" });

end

-- Produces a single merlon

function Merlon()

Box(xSize, ySize, merlonWidth-crenelWidth);

end

hopefully it's length doesn't scare you off, assuming you're still with me you can see that the basic structure is the same; functions are often creating new scopes at a given position relative to their parents and based on the parent scope's size. Points that I think are worth noticing include:

- The SquareBoundary() function shows how other Lua functions can be called just like normal to do additional work and allow code re-use without having to create intermediate scopes

- The RotateToY() function demonstrates rotating scopes, all translation and rotations are inherited automatically to child scopes

- The Appearance() function is used here to change the appearance for subsequent shapes, in this case changing to an appearance that doesn't have the rock floor texture so we don't get rock on the flat top surfaces of the merlons.

- The SubDivZ() function illustrates a more powerful way of subdividing space, in this case creating as many instances of the Merlon scope with a given width as will fit along the Z axis of the current scope.

At the moment the system is only capable of generating two basic geometric solids from it's scopes, either the boxes you've already seen or cylinders along the X, Y or Z axes. These basic shapes can be combined however to create far more interesting shapes using a technique called Constructive Solid Geometry (CSG) where the results are combined using one of a range of boolean operators.

There are three primary operators available:

|

| Union is the default which merges the shapes together |

function Axiom() Box(0, 0, zSize/4, xSize/2, ySize/2, zSize/2); CylinderZ(xSize/4, 0, 0, xSize/2, ySize, zSize); end

|

| Subtract removes the second shape from the first |

function Axiom() Box(0, 0, zSize/4, xSize/2, ySize/2, zSize/2); CylinderZ(xSize/4, 0, 0, xSize/2, ySize, zSize, CSG_Subtract); end

|

| Intersect produces the portion of solid that is common to both |

function Axiom() Box(0, 0, zSize/4, xSize/2, ySize/2, zSize/2); CylinderZ(xSize/4, 0, 0, xSize/2, ySize, zSize, CSG_Intersect); end

CSG has been around for a long time and I've used it before on CPU based ray tracer projects where it's relatively straightforward to implement operating on the intersecting spans of rays, but it's a whole lot more complicated when operating on geometry as the finite numerical precision of floating point numbers often leads to problematic fragments and slivers of geometry, problems with non-sealed hulls and issues with coincident surfaces.

Dealing with SDFs however eliminates these problems as the sample space is regular with no problems of open or closed surfaces. (See Iñigo Quilez's page on Modeling with Distance Functions if you are interested in this)

In addition to the regular boolean operators, working with SDFs provides some other interesting options:

|

| Adding signed distances together to create a blend |

function Axiom() Box(0, 0, zSize/4, xSize/2, ySize/2, zSize/2); CylinderZ(xSize/4, 0, 0, xSize/2, ySize, zSize, CSG_Add); end

here a basic addition is being applied resulting in a blend between the box and the cylinder shapes, likewise subtraction and other arithmetic operations can be used.

Advanced Parameters

The flexibility of Lua also makes it simple to add additional optional parameters to function calls. By allowing a table to be passed at scope and shape creation time context specific values can be given:

CylinderY(0, ySize-10, 0, xSize, 10, zSize, { topRadius=0; appearance="RoofTilesBrown" });

here the cylinder has been given a zero top radius factor to turn it into a cone and the appearance for the shape changed to a brown roof tile effect producing the top of the castle's turret:

|

| Turret roof formed by a cylinder with zero top radius |

Finally it's also perfectly possible to apply arbitrary functions to areas of the SDF in much the same way as the geometric primitives do, but instead of generating values we can modify the values already present to produce interesting or bizarre effects that would be difficult to achieve with geometry directly (or at least without massively tessellated geometry)

NoiseShape(-4, -4, -4, xSize+8, ySize+8, zSize+8, CSG_Add, { noiseScale=0.5, noiseAmplitude=1.5 } );

here a Noise modifier has been applied to an area of the scope to warp the values already generated for the keep:

|

| Regular keep without the modifier applied |

|

| Same keep with the Noise() modifier applied just for fun |

Variations on a Theme

So far everything presented here has been undeniably procedural being based on rules and parameters but it has also been entirely deterministic. If I feed one of these Lua scripts into the system five times I will get exactly the same building out each and every time which is going to make it somewhat tedious to produce the wide variety of buildings and structures with which I hope to populate my voxel worlds.

The real power of procedural systems though of course is their ability to produce endless variations of something by supplying different input parameters or seeds. For my building system there are only two primary inputs that dictate how the final architecturee looks: the first is the bounding box for the building comprised of the size of the plot onto which the building must fit and the height it's allowed to reach. These would be defined by the higher level town or city planning systems driven by factors such as population density, building type and the distance from the centre of the conurbation, the bounds are simply passed to the Axiom function as the size of the root scope. By writing the Lua script properly it can be made to work with a wide variety of overall building sizes, functions such as SubDiv() are really useful here to enable repetition along unknown length regions without undue scaling or stretching.

The second factor that has a more significant impact on the form of the building is the seed value that is used to initialise the random number generator. This is calculated from the position of the building on the planet so the same building in the same spot will always look the same but the same script file used to generate a building at a different location may look completely different. The Rand() function is used in the script to make decisions that can change the architecture: deciding whether to put a square tower, a round turret or nothing at all on a corner of the castle for example or whether there is a gateway on a given wall. Rand() can also be used to vary the size of scopes and shapes, so for example once the choice of tower or turret is made it's width and height can be further randomised within sensible limits leading to a wide variety of final apperances.

Wrap Up

Hopefully this has been an interesting glimpse into what I hope will be a powerful system for synthetic formations, I've certainly learnt a lot about Lua and it's integration with C++ along the way which has been rewarding in itself, but feeling like the system I've created is open and flexible enough to produce interesting, realistic(ish) or even fantastical structures is satisfying.

There is even scope here to release a stand alone version of the viewer tool these screenshots are from so you too could experiment with creating architecture from Lua - as long as people can accept it's limitations of course

Anyone interested?